Generative Output Vetting (G.O.V): Raising the Bar for Safer, Responsible AI

A Practical Framework for Mitigating AI Output Risks in High-Stakes Domains

Tony Tudor

10/13/2025 · 5 min read

Why We Need G.O.V Now

As generative AI becomes central to digital products and decision-making, the risks in its outputs are coming under intense scrutiny. Generative Output Vetting (G.O.V) is a structured, multi-layered approach to mitigating these risks. This post defines G.O.V, explains why output vetting is increasingly urgent, especially in sensitive areas like healthcare, and outlines practical steps for implementation, including how to address common AI pitfalls and the snowball effect of errors.

1. The Known Issues with AI Outputs

Despite rapid advances, generative AI systems remain prone to well-known flaws:

Hallucinations: AI models often fabricate facts, references, or even entire narratives, presenting them with unwarranted confidence.

Biases: Trained on vast, un-curated datasets, models can propagate or amplify social, cultural, or systemic biases embedded in their training data.

Ethical Risks: Inappropriate, offensive, or otherwise unethical outputs can slip through, especially in unsupervised or lightly monitored deployments.

Contextual Misunderstandings: AI models may misinterpret nuanced user intent, leading to outputs that are irrelevant, misleading, or even harmful.

These issues are not theoretical—they are frequently observed in production systems, from chatbots giving medical advice to automated content moderation tools making unfair decisions. Mitigating these risks requires more than model-level tweaks; it demands a systematic, output-focused approach.

2. AI Integration and the Snowball Effect

The stakes are rising. As AI-generated outputs are woven deeper into product workflows and automated decision-making, their influence multiplies. A single erroneous output can trigger a cascade of downstream errors—a phenomenon known as the snowball effect. When AI outputs are used as inputs for further automation, reporting, or human decisions, any initial mistake is propagated and potentially amplified at every subsequent step.

For example, in a healthcare application, an AI-generated patient summary containing a subtle misdiagnosis may be passed to another algorithm for treatment recommendations, then presented to clinicians, and ultimately affect patient care. Each layer increases the risk, scale, and complexity of the resulting harm.
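To make the compounding concrete, here is a rough back-of-the-envelope sketch. It assumes each stage introduces errors independently and that no stage corrects an upstream mistake. Both are simplifications, and the per-stage error rates are hypothetical numbers chosen only for illustration.

```python
from math import prod

def clean_output_probability(stage_error_rates):
    """Probability that no stage introduces an error.

    Assumes stages fail independently and never repair upstream mistakes.
    """
    return prod(1.0 - p for p in stage_error_rates)

# Hypothetical rates: summary model, recommendation model, reporting step.
rates = [0.05, 0.05, 0.05]
p_clean = clean_output_probability(rates)
print(f"P(at least one error reaches the end) = {1 - p_clean:.3f}")  # 0.143
```

Under these assumptions, three stages that are each 95% reliable yield an end result that is flawed roughly one time in seven.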

3. Magnitude of Potential Impact: Why G.O.V Matters

The consequences of unchecked AI outputs are particularly acute in domains where the cost of error is high:

Healthcare: Misinformation can lead to misdiagnosis, inappropriate treatment, or even patient harm.

Product Workflows: Compounding errors can undermine user trust, damage brand reputation, and expose organizations to legal and ethical liabilities.

Finance: Faulty outputs can drive poor investment decisions, regulatory breaches, or fraud detection failures.

Military: Erroneous AI outputs can impact mission-critical decisions, with potentially catastrophic results.

Politics: Misinformation or biased outputs can sway public opinion, impact elections, or foster polarization.

As AI’s reach extends, so too does the magnitude of its potential negative impacts. The imperative for robust output vetting is clear.

4. Why Existing AI Engines Fall Short

Despite ongoing improvements, even the most advanced AI engines exhibit persistent, engine-specific shortcomings. Model architectures evolve, but core vulnerabilities—hallucinations, bias, ethical lapses—endure. No single model or vendor can guarantee comprehensive reliability, especially in real-world, high-stakes deployments. Relying solely on model-level safeguards or post-hoc human review is insufficient and unsustainable at scale.

Introducing Generative Output Vetting (G.O.V)

Generative Output Vetting (G.O.V) is a formalized, multi-layered process that systematically evaluates, cross-checks, and aggregates AI-generated outputs before integration into user-facing workflows or critical decisions. G.O.V is designed to:

Reduce the likelihood of errors, hallucinations, and biases slipping into production.

Provide transparent, auditable safeguards for sensitive applications.

Enable scalable, automated quality control without relying exclusively on human reviewers.

At its core, G.O.V leverages redundancy, diversity, and structured verification to catch and correct issues that any single AI model or workflow might miss.
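A small calculation hints at why redundancy helps. The sketch below assumes each model is simply right or wrong and errs independently with the same probability. Real models that share training data will violate that assumption to some degree, which is why diversity matters. The function name and the 10% error rate are illustrative, not part of G.O.V itself.

```python
from math import comb

def majority_error_rate(p, n=3):
    """Probability that more than half of n models are wrong at once,
    assuming each errs independently with probability p."""
    k_min = n // 2 + 1  # smallest number of wrong models that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))

print(round(majority_error_rate(0.10, 3), 4))  # 0.028
```

With three independent models at a 10% error rate, a wrong majority occurs about 2.8% of the time. Correlated failures push that figure back up toward the single-model rate.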

The G.O.V Process in Practice

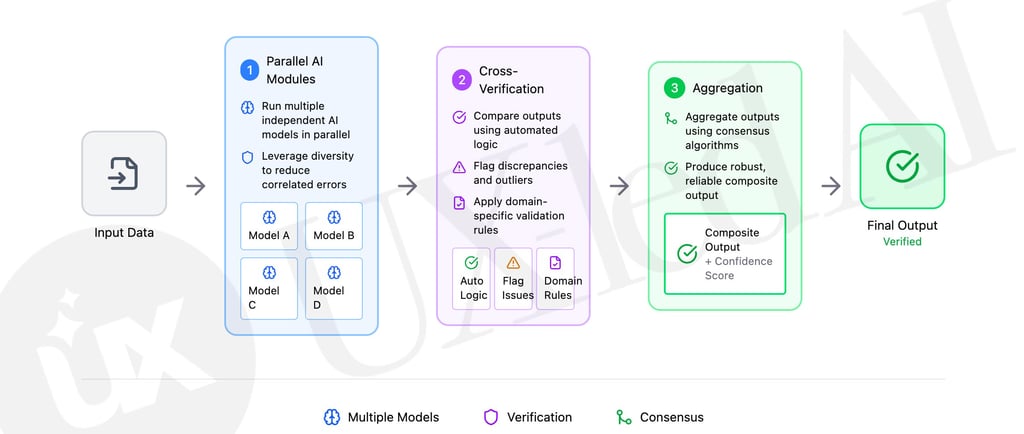

Implementing G.O.V involves three key steps:

1. Parallel AI Modules:

Run multiple, independent AI models (from different AI engines or with distinct architectures) in parallel on the same input.

Leverage diversity—different models will have varying failure modes, reducing correlated errors.

2. Cross-Verification:

Compare outputs across all models using automated logic and, where feasible, targeted human review.

Flag discrepancies, outliers, or confidence gaps for additional scrutiny.

Employ domain-specific rulesets and validation logic to check factual consistency and adherence to ethical standards.

3. Aggregation:

Aggregate the vetted outputs using consensus algorithms, weighted voting, or rule-based selection.

Produce a single, composite output that is robust, reliable, and accompanied by a confidence score and audit trail.

This structured approach transforms output vetting from an ad hoc, manual process into a scalable, repeatable workflow aligned with UX principles and real-world risk tolerance.
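The three steps above can be sketched in a few dozen lines. This is a minimal illustration, not a reference implementation. The model functions are stand-in stubs, the names (`run_parallel`, `cross_verify`, `aggregate`, `vet`) are hypothetical, and outputs are compared by exact string match. A real system would need semantic comparison and domain-specific rules.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class VettedOutput:
    answer: Optional[str]  # None means "escalate to human review"
    confidence: float      # share of all models that agreed on the answer
    audit_trail: list = field(default_factory=list)

def run_parallel(models, prompt):
    """Step 1: call every model on the same input concurrently."""
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: f.result() for name, f in futures.items()}

def cross_verify(outputs, rules):
    """Step 2: apply each rule to each output and record failures."""
    passed, trail = {}, []
    for name, text in outputs.items():
        failed = [rule.__name__ for rule in rules if not rule(text)]
        trail.append({"model": name, "output": text, "failed_rules": failed})
        if not failed:
            passed[name] = text
    return passed, trail

def aggregate(passed, total_models, min_agreement=2):
    """Step 3: majority vote over the outputs that survived verification."""
    if not passed:
        return None, 0.0
    answer, votes = Counter(passed.values()).most_common(1)[0]
    confidence = votes / total_models
    return (answer if votes >= min_agreement else None), confidence

def vet(models, prompt, rules):
    outputs = run_parallel(models, prompt)
    passed, trail = cross_verify(outputs, rules)
    answer, confidence = aggregate(passed, len(models))
    return VettedOutput(answer, confidence, trail)

# Stub models standing in for calls to three different engines.
def model_a(prompt): return "Paris"
def model_b(prompt): return "Paris"
def model_c(prompt): return "Lyon"

def non_empty(text): return bool(text.strip())

models = {"a": model_a, "b": model_b, "c": model_c}
result = vet(models, "What is the capital of France?", [non_empty])
print(result.answer, round(result.confidence, 2))  # Paris 0.67
```

The audit trail keeps every model's raw output and any failed rules, so a reviewer can later see why a given answer was chosen or escalated.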

Example: Applying G.O.V in Healthcare

Consider a clinical decision support tool generating patient summaries from electronic health records (EHRs). Here’s how G.O.V applies:

Parallel AI Modules: Patient data is sent to three language models: one open-source, one proprietary, and one custom-trained on local notes.

Cross-Verification: Outputs are compared for consistency in diagnoses, medication lists, and key findings. Automated logic flags any discrepancies (e.g., one model suggests a diagnosis the others omit). A rules engine checks for guideline adherence and ethical red flags (e.g., inappropriate recommendations).

Aggregation: If all outputs align, the consensus summary is sent to the clinician. If discrepancies are detected, the system requests a targeted human review or applies a conservative aggregation rule (e.g., only include findings present in at least two outputs). The process is logged for auditability.

By cross-checking and aggregating, G.O.V reduces single-model risks and boosts clinician confidence in AI recommendations.
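The conservative aggregation rule mentioned above (keep only findings present in at least two outputs) might look like the following sketch. It assumes each model's findings have already been normalized to a shared vocabulary. The model names and findings are made-up sample data. Returning the unsupported findings for review, rather than dropping them silently, is a design choice added here for illustration.

```python
from collections import Counter

def conservative_findings(model_findings, min_support=2):
    """Keep findings reported by at least min_support models.

    Returns (kept, flagged); flagged findings lack enough support
    and are candidates for targeted human review.
    """
    counts = Counter(f for findings in model_findings.values() for f in set(findings))
    kept = sorted(f for f, n in counts.items() if n >= min_support)
    flagged = sorted(f for f, n in counts.items() if n < min_support)
    return kept, flagged

# Hypothetical normalized outputs from the three models.
outputs = {
    "open_source": {"hypertension", "type 2 diabetes"},
    "proprietary": {"hypertension", "type 2 diabetes", "atrial fibrillation"},
    "custom_local": {"hypertension"},
}
kept, flagged = conservative_findings(outputs)
print(kept)     # ['hypertension', 'type 2 diabetes']
print(flagged)  # ['atrial fibrillation']
```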

Example: Applying G.O.V in Fintech

Imagine a financial risk assessment platform that generates loan eligibility reports using data from customer profiles, transaction histories, and credit scores. Here’s how G.O.V applies:

Parallel AI Modules: Financial data is processed simultaneously by three AI models—one open-source, one proprietary, and one custom-built for regional regulatory compliance.

Cross-Verification: The system compares outputs for consistency in creditworthiness ratings, flagged risk factors, and suggested loan terms. Automated logic identifies discrepancies (for example, if one model approves a loan that others decline). A rules engine checks for regulatory adherence and ethical concerns, such as potential bias in lending decisions or non-compliance with fair lending laws.

Aggregation: When all outputs agree, the consensus risk report is forwarded to the loan officer. In cases of disagreement, the system triggers a targeted human review, or applies a conservative aggregation—only findings present in at least two model outputs are included. All steps are logged to maintain an audit trail and support regulatory scrutiny.

By leveraging parallel review and aggregation, G.O.V minimizes the risks of single-model errors and enhances confidence in automated financial recommendations, supporting responsible decision-making in high-stakes fintech environments.
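The routing logic described above can be sketched as follows, assuming each model's output has been reduced to a single decision label such as "approve" or "decline". The function name, labels, and log format are hypothetical. A production system would write to durable, tamper-evident storage rather than an in-memory list.

```python
import json
from datetime import datetime, timezone

def route_loan_decision(decisions, audit_log):
    """Forward unanimous decisions; send any disagreement to human review.

    Every call appends one JSON record to audit_log.
    """
    unique = set(decisions.values())
    outcome = unique.pop() if len(unique) == 1 else "human_review"
    audit_log.append(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "decisions": decisions,
        "outcome": outcome,
    }, sort_keys=True))
    return outcome

log = []
agreed = {"open_source": "approve", "proprietary": "approve", "regional": "approve"}
split = {"open_source": "approve", "proprietary": "decline", "regional": "decline"}
print(route_loan_decision(agreed, log))  # approve
print(route_loan_decision(split, log))   # human_review
```

Here any disagreement goes to a person, which is the stricter of the two options the example describes. The at-least-two rule could be substituted where the risk tolerance allows.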

Making G.O.V the Standard for Responsible AI

As generative AI becomes foundational to products and services in sensitive domains, unchecked outputs pose significant risks. G.O.V provides a robust framework to address these risks, making output vetting a critical design priority. Adopting G.O.V helps organizations deliver safer AI, protect users, and maintain trust.

The call to action is clear: integrate Generative Output Vetting into your AI workflows now—especially where the stakes are highest. Don’t let the snowball effect of unchecked errors undermine the promise of AI or the safety of your users.

UX-led AI
